Economics and Real Estate

The New Cost-Per-Megawatt Calculation

James Murphy

10/28/2025 · 2 min read

🌐 The AI Infrastructure Revolution: Redefining Value from Land to Latency

In the race for Artificial Intelligence (AI) dominance, a quiet but profound economic shift is underway. The massive buildout of AI infrastructure, from hyperscale data centers to specialized chips, is rewriting the rules of business, real estate, and technology. This post, the next in our series, examines three core economic models being reshaped by the AI boom.

1. 🏗️ Real Estate's New Mandate: From Location to Megawatt Capacity

The AI gold rush is transforming traditional commercial real estate valuation. For sites suited to data centers, land value is no longer determined primarily by proximity to urban centers or highway access, but by the site's connection to raw power and high-speed data.

The Power Grid is the New Waterfront: The most valuable land parcels are now those with guaranteed, massive, and reliable access to power grids and high-capacity fiber optic backbones. This demand is fueling a surge in industrial real estate values in emerging tech states like Ohio and North Carolina.

Residential Land Repurposed for Compute: We're seeing a radical shift in which low-value residential or commercial properties near electrical substations or key energy arteries are purchased, often at a premium, then torn down and replaced with power-hungry data centers.

The Valuation Pivot: This shift means real estate value is increasingly measured by a site's potential megawatt capacity rather than by the traditional "cost per square foot" metric. For real estate investors, understanding the local utility landscape is now the single most critical factor.
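To make the pivot concrete, here is a minimal sketch comparing two parcels on both metrics. The `Parcel` class, the parcel names, and every price, area, and megawatt figure are hypothetical inputs chosen for illustration, not market data; the sketch also assumes the deliverable power for each site is already known from the utility.

```python
from dataclasses import dataclass


@dataclass
class Parcel:
    """A candidate site. Every figure used below is a hypothetical input, not market data."""
    name: str
    price_usd: float       # acquisition price of the parcel
    area_sq_ft: float      # land area
    deliverable_mw: float  # power the utility can deliver to the site (assumed known)

    def cost_per_sq_ft(self) -> float:
        return self.price_usd / self.area_sq_ft

    def cost_per_mw(self) -> float:
        # A parcel with no deliverable power cannot host a data center at any price.
        if self.deliverable_mw <= 0:
            return float("inf")
        return self.price_usd / self.deliverable_mw


parcels = [
    Parcel("Suburban lot near highway", 4_000_000, 400_000, 5),
    Parcel("Older lot beside substation", 6_000_000, 300_000, 60),
]

for p in parcels:
    print(f"{p.name}: ${p.cost_per_sq_ft():,.2f}/sq ft, ${p.cost_per_mw():,.0f}/MW")

# Rank by megawatt capacity instead of land area.
best = min(parcels, key=Parcel.cost_per_mw)
print("Best value per MW:", best.name)
```

With these assumed numbers, the lot beside the substation costs twice as much per square foot ($20.00 vs. $10.00) but one-eighth as much per megawatt ($100,000 vs. $800,000), so the two metrics pick opposite winners.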

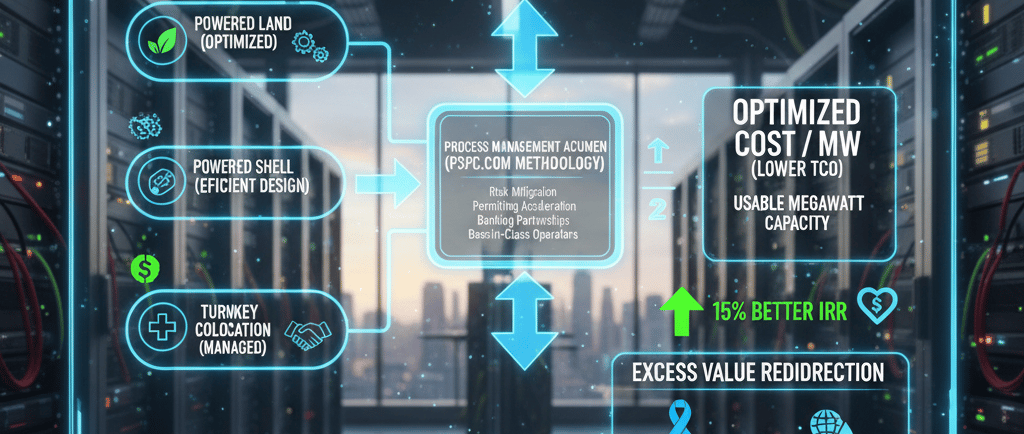

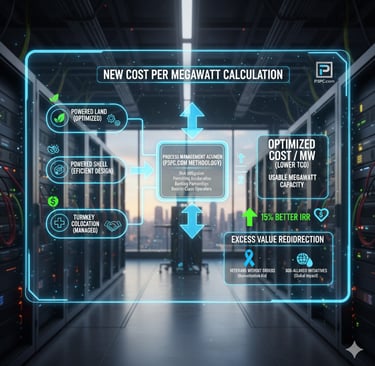

2. ⚡ The Shifting Metric: From Cost Per Square Foot to Cost Per Inference

The central economic goal for every major tech player is efficiency, specifically how cheaply and quickly they can run their AI models. That goal changes how success is measured in the data center industry.

The End of Square Footage: The traditional focus on "cost per square foot" is obsolete. The primary concerns are now highly specialized: "Cost Per Inference" and "Cost Per Megawatt."

Energy Efficiency is Profit: Companies are racing to engineer the most energy-efficient data centers possible. The objective is to squeeze the maximum number of AI calculations (inferences) out of every watt of power drawn or, equivalently, every joule of energy consumed.
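A rough way to see why efficiency becomes profit is to convert a server's power draw and throughput into an electricity cost per inference. This is a simplified sketch, not an industry-standard formula: the function name is hypothetical, the server figures and $0.08/kWh price are assumptions, and facility overhead is modeled with Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy.

```python
JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6 million joules


def energy_cost_per_inference(it_power_w: float, inferences_per_s: float,
                              pue: float, usd_per_kwh: float) -> float:
    """Electricity cost of one inference, including facility overhead via PUE."""
    it_joules = it_power_w / inferences_per_s   # W / (inferences/s) = joules per inference
    facility_joules = it_joules * pue           # PUE scales IT energy up to total facility energy
    return facility_joules / JOULES_PER_KWH * usd_per_kwh


# Hypothetical server: 10 kW draw, 500 inferences/s, electricity at $0.08/kWh.
for pue in (1.2, 1.6):
    per_million = energy_cost_per_inference(10_000, 500, pue, 0.08) * 1_000_000
    print(f"PUE {pue}: ${per_million:.2f} per million inferences")
```

Under these assumptions the same hardware costs about $0.53 per million inferences at a PUE of 1.2 and about $0.71 at 1.6, so cutting cooling and power-delivery overhead lowers the cost of every answer served.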

AI Infrastructure Economics: An optimized data center minimizes cooling overhead, maximizes compute density, and streamlines power delivery. Together, these lower the Total Cost of Ownership (TCO) of a firm's AI operations, which translates directly into a competitive advantage. Choosing the right data center operators is therefore crucial to achieving this efficiency.
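Electricity is only part of TCO. The sketch below spreads hardware cost and yearly operating cost across every inference served, assuming straight-line amortization over a fixed hardware lifetime. The function name and all dollar, lifetime, throughput, and utilization figures are hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def tco_cost_per_inference(capex_usd: float, lifetime_years: float,
                           annual_opex_usd: float, peak_inferences_per_s: float,
                           utilization: float) -> float:
    """Total cost of ownership spread over every inference served in a year."""
    # Straight-line amortization of hardware, plus yearly power, cooling and staffing.
    annual_cost = capex_usd / lifetime_years + annual_opex_usd
    # Only the fraction of capacity actually used produces billable inferences.
    annual_inferences = peak_inferences_per_s * utilization * SECONDS_PER_YEAR
    return annual_cost / annual_inferences


# Hypothetical server: $300k up front, 5-year life, $20k/year to run, 500 inferences/s peak.
for utilization in (0.3, 0.6):
    per_million = tco_cost_per_inference(300_000, 5, 20_000, 500, utilization) * 1_000_000
    print(f"utilization {utilization:.0%}: ${per_million:.2f} per million inferences")
```

With these assumed inputs, doubling utilization from 30% to 60% halves the cost per million inferences (roughly $16.91 to $8.46), which is one reason operators chase dense, fully loaded facilities.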

3. 🚀 The Ultimate Goal: Reducing Latency for the End-User

While trillions of dollars flow into land acquisition and specialized chips (such as GPUs), the entire cycle ultimately serves a single, customer-facing goal: an end-user experience good enough to become indispensable.

AI as a Utility: Just like electricity or running water, AI is rapidly cementing itself as an indispensable utility for consumers and businesses alike. Speed is critical to utility adoption.

The Need for Speed (Low Latency): Every element of the build cycle, from venture capital funding for specialized chips to the construction of power-hungry hyperscale data centers, is aimed at reducing latency and the final cost of AI inference.
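One way to see how location and compute both feed into latency is a simple latency budget. This is a back-of-the-envelope sketch, not a network model: it assumes light in optical fiber covers roughly 200 km per millisecond, treats the route as a straight line, and uses a hypothetical 100 ms model response time; the function name is also hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approximation: light in optical fiber travels roughly 200 km per millisecond


def response_latency_ms(distance_km: float, inference_ms: float,
                        network_overhead_ms: float = 0.0) -> float:
    """Rough end-to-end latency: round-trip fiber propagation + network overhead + model time."""
    round_trip_ms = 2 * distance_km / FIBER_KM_PER_MS
    return round_trip_ms + network_overhead_ms + inference_ms


# Hypothetical 100 ms model response served from a nearby vs. a distant data center.
for km in (50, 2_000):
    print(f"{km:>5} km away: {response_latency_ms(km, 100):.1f} ms")
```

Under these assumptions, moving the data center from 2,000 km to 50 km away saves about 20 ms, while the model's own 100 ms still dominates. That is why faster, cheaper inference matters as much as proximity. Real routes are longer than straight lines and add switching overhead, so the propagation figures here are lower bounds.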

Impact on Daily Life:

Faster inference means search results appear instantly.

Low latency delivers seamless, highly personalized content recommendations.

Flawless conversational AI (like chatbots and virtual assistants) becomes a reality.

The faster and cheaper the compute, the better the final digital experience, ensuring AI becomes a continuous, reliable part of our lives.

© 2025. All rights reserved.